Although artificial intelligence (AI) and machine learning (ML) have been widely used in the capital markets sector since the 2000s, the emergence of generative AI (GenAI) within the last 18 months has spurred a significant increase in investment in AI tools and technologies. This trend is set to continue as AI is deployed and utilised in increasingly innovative ways across the front, middle and back office.

In this article, we explore the current state, challenges, and future directions of AI and ML in capital markets, with insights from four industry experts who will be speaking at A-Team Group’s upcoming AI in Capital Markets Summit London.

The Current Landscape: GenAI and Internal Efficiency

GenAI – and large language models (LLMs) such as GPT-4 in particular – have certainly made a significant impact on the direction of technology projects in capital markets firms. But where is the work actually being focused?

“Many individuals face pressure from senior management to take action in response to the wave of GenAI, as no one wants to be left behind,” says Theo Bell, Head of AI Product at Rimes, a provider of enterprise data management and investment management solutions. “However, the levels of maturity vary significantly. Most firms are focusing on internal efficiency rather than the investment process itself, utilising tools like Microsoft 365 and GitHub co-pilots to streamline workflows, whether that’s for writing code, emails, or generating documents.”

Bell indicates that some firms are further ahead than others in their utilisation of GenAI, having developed front-office co-pilots for broker research, for example. “Some are automating tasks such as market analysis and performance attribution reporting, or – like us – are building co-pilots using their own data to answer questions like, ‘What is the top ETF by AUM?’ or ‘What are my holdings in Apple?’”

Nathan Marlor, Head of Data and AI at Version 1, an IT services and solutions vendor specialising in digital transformation, agrees that the current focus of GenAI is primarily on productivity gains. “It automates the mundane aspects of our jobs,” he says. “Within financial services, GenAI’s impact might not be as significant as in other industries as the use cases differ. The primary application of AI in financial services remains as it has always been,” he says, “using machine learning models to make market predictions, understand trends, and provide insights by combining numerous data sources swiftly.”

Machine Learning vs GenAI

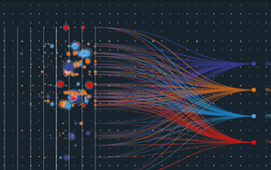

The distinction between machine learning and GenAI is an important one, given their different use cases within capital markets. While they are both subsets of artificial intelligence, they focus on different aspects of AI applications. ML involves algorithms that learn from and make predictions or decisions based on data, improving their performance as they are exposed to more data over time. In contrast, GenAI and LLMs often leverage complex models such as deep neural networks to generate new content that mimics real-world examples. While ML can include predictive analytics, GenAI is distinguished by its ability to create novel, coherent outputs that resemble human-like interactions.

“It’s important to remember that AI and ML have been used in this industry for years and will continue to be valuable, especially in predictive AI. Large language models are not always necessary,” says Bell. “We are beginning to better understand which use cases are suitable for GenAI and which are better served by more traditional methods.”

She continues: “GenAI models are adept at converting text to SQL and running data queries on things like holdings, benchmarks and so on. But I’m keen to discover the next step in workflow automation, not just asking questions about ETFs or holdings for example, but exploring how to enhance the subsequent stages in the trading workflow and progress things up the value chain.”
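To make the text-to-SQL idea concrete, the sketch below shows the kind of query such a co-pilot might produce for the question “What are my holdings in Apple?” and runs it against a small in-memory database. It is illustrative only: the `holdings` table, its columns and its rows are hypothetical, and the SQL is hand-written to stand in for model output rather than taken from any vendor’s product.

```python
import sqlite3

# Hypothetical schema and rows, invented purely for illustration.
DEMO_ROWS = [
    ("Growth", "AAPL", "Apple Inc.", 100, 19000.0),
    ("Growth", "MSFT", "Microsoft Corp.", 50, 21000.0),
    ("Income", "AAPL", "Apple Inc.", 40, 7600.0),
]

QUESTION = "What are my holdings in Apple?"

# SQL that a text-to-SQL model might plausibly return for QUESTION.
# Hand-written here; no model is called in this sketch.
GENERATED_SQL = """
SELECT portfolio,
       SUM(quantity)     AS quantity,
       SUM(market_value) AS market_value
FROM holdings
WHERE issuer = ?
GROUP BY portfolio
ORDER BY portfolio
"""


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database holding the hypothetical positions."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE holdings ("
        "portfolio TEXT, ticker TEXT, issuer TEXT, "
        "quantity INTEGER, market_value REAL)"
    )
    conn.executemany("INSERT INTO holdings VALUES (?, ?, ?, ?, ?)", DEMO_ROWS)
    return conn


def run_query(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list:
    """Execute the generated SQL with bound parameters and return all rows."""
    return conn.execute(sql, params).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    print(QUESTION)
    for row in run_query(conn, GENERATED_SQL, ("Apple Inc.",)):
        print(row)
```

Passing the issuer name as a bound parameter, rather than splicing it into the query string, is one simple guard worth keeping when the SQL itself comes from a model.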

Reena Raichura, ex-head of product solutions at interop.io and now the founder of Finergise, a fintech advisory company, outlines some concerns regarding the focus (hype?) around GenAI and LLMs over traditional ML methods in the capital markets sector.

“Currently, much attention is on GenAI and LLMs. However, many real-world applications of AI and ML are unrelated to GenAI,” she says. “For instance, behavioural analysis, bond pricing, and liquidity discovery can all utilise machine learning without involving GenAI or ChatGPT. It seems we’re jumping ahead to GenAI & LLMs without fully leveraging machine learning in the trading space. It feels like we’ve skipped a step. There’s still much to achieve with ML for financial institutions and vendors alike. When rolling out new applications or functionality, analysing user behaviour and workflow is essential to ensure effective utilisation and that you are meeting the needs of your clients/users. Understanding what traders and operations staff want is crucial. By analysing user behaviour on platforms, understanding workflows, and identifying the information users seek, we can intelligently prompt users with the right actions and data at the right time as well as better build the GenAI tools.”

Infrastructure and Data Strategy

One factor behind GenAI’s rapid take-up is that its infrastructure requirements are markedly different from those required for traditional ML techniques, explains Raichura. “People are quick to adopt GenAI because it doesn’t necessarily require modernising the trading stack; it’s about managing vast amounts of data. In contrast, to get the most value from machine learning, modernisation is required and the ability to analyse user interactions across applications. While the enthusiasm for GenAI is understandable, it’s important to consider the foundational work required for effective machine learning.”

Why is it so important to prioritise data quality and management in AI development?

“Data and AI are intrinsically linked, and the adage ‘garbage in, garbage out’ still applies,” says Marlor. “If your data lacks quality and proper lineage, developing any AI solution will be challenging. Positioning data as a first-class citizen is crucial as it underpins all subsequent AI developments. Without the right data at the appropriate sampling rate, you can’t extract relevant features or train models effectively.”

Raichura agrees. “You definitely can’t have an AI strategy without a data strategy. It’s crucial to address how you structure your data. The two strategies must go hand in hand.”

Challenges and Risks: Price, Performance, and Privacy

Various risk factors should be taken into consideration when undertaking a new GenAI project, explains Marlor. “Price, performance, and privacy are the primary risk factors to consider,” he says.

“Regarding price, implementing a new GenAI solution using GPT-4 Turbo or other advanced models may result in unexpectedly high bills if not managed correctly. Costs can quickly escalate, particularly if models are not right-sized for performance. This is a key risk, as the price tag of these solutions is not always fully understood. Deploying open-source models on static cloud infrastructure can help control costs, though it might slow down performance, which is another risk consideration. What do you need for your specific use case? From an engineering perspective, it’s easy to become engrossed in leaderboards and benchmarks. However, benchmarks can sometimes be misleading, so testing a particular model for your specific use case can provide a better understanding of its performance.”

There are also privacy risks associated with using public GenAI models in a business environment, says Marlor. “Public models can retrain based on your prompts, potentially exposing your data to the public domain. Private data needs to be managed differently, possibly using local or cloud-based open-source models, or small language models to reduce costs.”

Explainability and Responsible AI

Explainability in AI models is another important consideration, particularly when deploying ‘black box’ type AI/ML models, suggests Harsh Prasad, a Senior Quantitative Researcher in AI/ML.

“When making investment decisions and finding any kind of alpha, random correlations are insufficient, they must be linked to causality,” he contends. “Essentially, when examining your decision-making process, the question becomes: how do you explain the patterns you observe? Do you have adequate tools to provide such explanations? This becomes even more complex in a multi-dimensional context, with numerous variables at play. Determining the exact contribution of each variable becomes crucial, especially when adopting more sophisticated, high-dimensional models. Without being able to identify the source of value, it will be challenging to convince management, regulators, or other stakeholders. Therefore, a discussion about how to explain observed patterns is essential.”
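As a rough illustration of what “the contribution of each variable” can mean, the sketch below handles the simplest possible case: a linear model, where each variable’s contribution to a prediction can be read off as its weight multiplied by how far the input sits from a baseline. The factor names, weights and values are hypothetical, and this is not Prasad’s method. High-dimensional, non-linear models need heavier attribution techniques, which is precisely where the difficulty he describes arises.

```python
from typing import Dict

# Hypothetical factor weights for a toy linear signal (illustration only).
WEIGHTS: Dict[str, float] = {"momentum": 0.5, "value": -0.25, "carry": 2.0}
INTERCEPT = 0.1

# Hypothetical baseline (e.g. average factor values) and one observation.
BASELINE: Dict[str, float] = {"momentum": 1.0, "value": 2.0, "carry": 0.5}
OBSERVATION: Dict[str, float] = {"momentum": 3.0, "value": 4.0, "carry": 1.0}


def predict(weights: Dict[str, float], intercept: float,
            x: Dict[str, float]) -> float:
    """Linear model: intercept plus the weighted sum of the inputs."""
    return intercept + sum(weights[name] * x[name] for name in weights)


def linear_contributions(weights: Dict[str, float],
                         baseline: Dict[str, float],
                         observation: Dict[str, float]) -> Dict[str, float]:
    """Per-variable contribution: weight times deviation from the baseline."""
    return {
        name: weights[name] * (observation[name] - baseline[name])
        for name in weights
    }


if __name__ == "__main__":
    contributions = linear_contributions(WEIGHTS, BASELINE, OBSERVATION)
    for name, value in contributions.items():
        print(f"{name}: {value:+.2f}")
    # The contributions account for the whole gap between the prediction
    # for this observation and the prediction at the baseline.
    gap = (predict(WEIGHTS, INTERCEPT, OBSERVATION)
           - predict(WEIGHTS, INTERCEPT, BASELINE))
    print(f"total: {sum(contributions.values()):+.2f} (gap {gap:+.2f})")
```

The useful property here is that the contributions sum to the difference between the prediction and the baseline prediction, so nothing is left unexplained. That additivity is what more sophisticated attribution methods try to preserve for models that are not linear.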

He continues: “Viewing everything through the lens of explainability makes things both interesting and challenging, particularly in terms of making a ‘black box model’ more accessible. In the context of GenAI, this raises questions about how models are validated. It’s fascinating to examine specific use cases, understand what exactly is being done, which techniques are employed, and why. While these techniques might be best-in-class at the moment, they are not necessarily perfect. So, what is the next step in research? Where is further work needed?”

In summary, it’s clear that while GenAI and LLMs like GPT-4 present a wide range of opportunities for advancing trading operations, the foundational elements of machine learning and robust data strategies are critical components for firms who want to realise the full potential of AI technologies. Moving forward, the industry will need to balance the allure of new AI tools with the practical aspects of data quality, model explainability, and infrastructural readiness, not only to enhance operational efficiencies, but also to pave the way for sustainable, value-driven innovations.

To learn more about how AI could benefit your organisation, where you need to invest from a data, technology and workforce perspective, and how to ensure your AI strategy will set your organisation on a path to future success, book your place at the A-Team Group’s AI in Capital Markets Summit today, using the link below.

AI in Capital Markets Summit London
